Spark is written in Scala and provides programming interfaces in Scala and Python (PySpark).

- Make sure Java (the JDK) is installed

- Install Scala and an IDE (IntelliJ)

- Set up sbt (the build tool for Scala)

- Set up winutils.exe (required for the HDFS APIs to work on a Windows laptop)

- Download the Spark binaries and untar them

cf:

- Setup Spark Development Environment – IntelliJ and Scala

- Apache Spark in 24 Hours: Hour 2, Installing Spark

Install the JDK

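- Choose an odd-numbered update release (the bug-fix version), e.g. 8u211

- Do not install it under Program Files; the install path must not contain spaces

- Add environment variables

Set JAVA_HOME to the JDK path. In cmd (run as administrator), enter: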

setx /M JAVA_HOME C:\java\jdk1.8.0_211

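- Open a new cmd window and test:

echo %JAVA_HOME%

- Add the JDK and JRE bin folders to PATH. In cmd (run as administrator), enter the command below (setx truncates values longer than 1024 characters, so make sure the combined PATH stays under that limit):

setx /M path "%path%;%JAVA_HOME%\bin;C:\java\jre1.8.0_211\bin"

- Open a new cmd window and test: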

javac -version

- Disable IPv6 for Java applications (make them use the IPv4 stack):

setx /M _JAVA_OPTIONS "-Djava.net.preferIPv4Stack=true"

cf: How to set JAVA_HOME in Windows 10

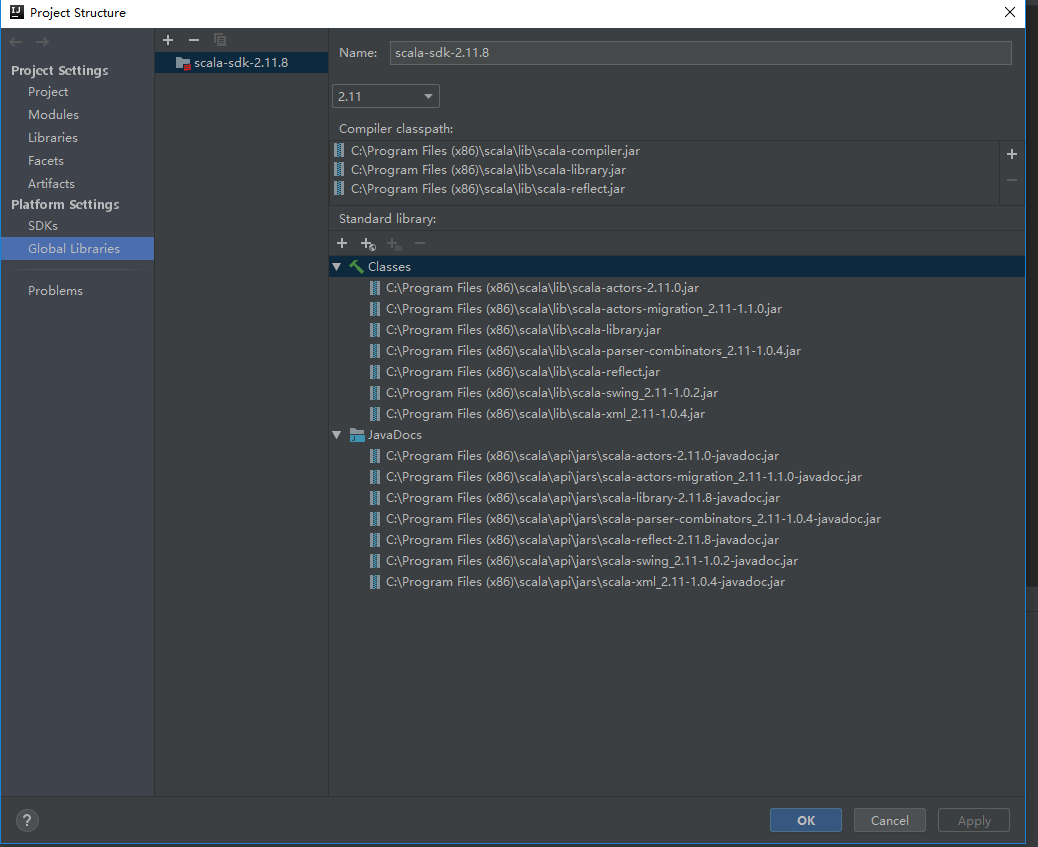
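The JAVA_HOME and PATH settings can be double-checked with a short script. This is a minimal sketch that assumes Python 3 is installed. check_java_env is a hypothetical helper and is not part of the JDK or Windows. It only inspects the variable values. It flags a missing JAVA_HOME, a JAVA_HOME that contains a space, and a JDK bin folder that is missing from PATH. It does not verify that the JDK files actually exist.

```python
import ntpath
import os


def check_java_env(env):
    """Return a list of problems with JAVA_HOME and PATH; an empty list means OK.

    Hypothetical helper: it only inspects the strings in `env`, and it uses
    ntpath so Windows paths are handled the same way on any OS.
    """
    # Windows variable names are case-insensitive ("Path" vs "PATH")
    env = {key.upper(): value for key, value in env.items()}
    java_home = env.get("JAVA_HOME", "").strip()
    if not java_home:
        return ["JAVA_HOME is not set"]

    problems = []
    if " " in java_home:
        problems.append("JAVA_HOME contains a space")

    # PATH entries are ';'-separated; compare without a trailing '\' and case-insensitively
    entries = {
        entry.strip().rstrip("\\").lower()
        for entry in env.get("PATH", "").split(";")
        if entry.strip()
    }
    java_bin = ntpath.join(java_home, "bin")
    if java_bin.lower() not in entries:
        problems.append(f"{java_bin} is not on PATH")
    return problems


if __name__ == "__main__":
    # Run this in a NEW cmd window: setx does not change windows that are already open
    for line in check_java_env(dict(os.environ)) or ["JAVA_HOME and PATH look OK"]:
        print(line)
```

Because setx only affects cmd windows opened afterwards, run the script from a freshly opened window.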

Install Scala, sbt and IntelliJ

- Download Scala (link) and install it

- Verify in cmd (exit with Ctrl+D):

scala

- Download sbt (link) and install it

- Verify in cmd (exit with Ctrl+D):

sbt

Inside sbt, entering console also opens the Scala interpreter.

- Download and install IntelliJ IDEA Community Edition

- Create a Scala project; see BUILDING A SCALA PROJECT WITH INTELLIJ AND SBT
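You can also script a check that the installers put scala and sbt on PATH. This is a minimal sketch that assumes Python 3. missing_tools is a hypothetical helper built on the standard shutil.which function. The list of tool names in the __main__ block is an assumption, so adjust it to what you installed.

```python
import shutil


def missing_tools(names):
    """Return the command names from `names` that cannot be found on PATH."""
    # On Windows, shutil.which also tries the PATHEXT extensions, so "sbt" finds sbt.bat
    return [name for name in names if shutil.which(name) is None]


if __name__ == "__main__":
    # Assumed tool names: adjust to what you installed
    missing = missing_tools(["java", "javac", "scala", "sbt"])
    print("missing: " + ", ".join(missing) if missing else "all tools found on PATH")
```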

Project structure

- .idea = IntelliJ configuration files

- project = files used during compilation. For example, build.properties lets you change the sbt version used when compiling your project.

- src = Scala source code, structured as:

src/main/scala

- target = the compiled output goes here when you compile the project

- build.sbt = the build definition:
  - name: name of the project
  - version: project version
  - scalaVersion: Scala version used to compile the project
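The layout above can also be created without IntelliJ. This is a minimal sketch that assumes Python 3. sbt_layout and write_sbt_layout are hypothetical helpers. The default Scala and sbt version numbers are placeholders, not values from this guide, and scalaVersion should match the Scala version your Spark download was built against. The sketch leaves out target and .idea because sbt and IntelliJ generate them.

```python
from pathlib import Path


def sbt_layout(name, version="0.1", scala_version="2.12.8", sbt_version="1.2.8"):
    """Return (directories, files) for a minimal sbt project.

    The default version numbers are placeholders; pick the Scala version
    that matches your Spark build.
    """
    directories = ["project", "src/main/scala"]
    files = {
        # build.sbt holds the name, version and scalaVersion settings
        "build.sbt": (
            f'name := "{name}"\n'
            f'version := "{version}"\n'
            f'scalaVersion := "{scala_version}"\n'
        ),
        # project/build.properties pins the sbt version used to build the project
        "project/build.properties": f"sbt.version={sbt_version}\n",
    }
    return directories, files


def write_sbt_layout(root, name, **versions):
    """Create the directories and files from sbt_layout under `root`."""
    root = Path(root)
    directories, files = sbt_layout(name, **versions)
    for directory in directories:
        # parents=True also creates `root` itself if it does not exist yet
        (root / directory).mkdir(parents=True, exist_ok=True)
    for relative_path, text in files.items():
        (root / relative_path).write_text(text, encoding="utf-8")
    return root
```

For example, write_sbt_layout("spark-demo", "spark-demo") creates a spark-demo folder in the current directory. You can then open that folder in IntelliJ or build it with sbt.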

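If the IntelliJ IDEA right-click menu has no Scala class option:

cf: idea 无法创建Scala class 选项解决办法汇总 (a roundup of fixes for IDEA's missing "Scala class" option)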

Install Hadoop

- Download and extract it

- Add environment variables

Set HADOOP_HOME to the Hadoop path. In cmd (run as administrator), enter:

setx /M HADOOP_HOME C:\hadoop-3.1.2

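- Open a new cmd window (run as administrator) so that %HADOOP_HOME% is defined, then add its bin folder to PATH:

setx /M path "%path%;%HADOOP_HOME%\bin"

- Open a new cmd window and test: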

hadoop version

- Download winutils (link) and replace Hadoop's bin folder with the winutils bin folder for the matching Hadoop version
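Here is a quick way to confirm that HADOOP_HOME is set and that winutils.exe ended up in its bin folder. This is a minimal sketch that assumes Python 3. check_hadoop_home is a hypothetical helper. The required file list contains only winutils.exe, which is an assumption; extend it if your setup needs other files from the winutils download.

```python
import os


def check_hadoop_home(env, required=("winutils.exe",)):
    """Return problems with HADOOP_HOME; an empty list means OK.

    `required` lists file names expected in the bin folder of HADOOP_HOME.
    """
    # Windows variable names are case-insensitive
    env = {key.upper(): value for key, value in env.items()}
    hadoop_home = env.get("HADOOP_HOME", "").strip()
    if not hadoop_home:
        return ["HADOOP_HOME is not set"]

    problems = []
    for name in required:
        # On Windows this checks e.g. C:\hadoop-3.1.2\bin\winutils.exe
        path = os.path.join(hadoop_home, "bin", name)
        if not os.path.isfile(path):
            problems.append(f"missing {path}")
    return problems


if __name__ == "__main__":
    for line in check_hadoop_home(dict(os.environ)) or ["HADOOP_HOME looks OK"]:
        print(line)
```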

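Install Spark

- Download the "Pre-built for Apache Hadoop" package (link), extract it, and add it to PATH. In cmd (run as administrator), enter:

setx /M SPARK_HOME C:\spark

Then open a new cmd window (run as administrator) so that %SPARK_HOME% is defined, and enter:

setx /M path "%path%;%SPARK_HOME%\bin"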

- Open a new cmd window and verify:

pyspark

- Open http://localhost:4040 to view the Spark UI

- Verify in cmd:

spark-shell

- Open http://localhost:4040 to view the Spark UI

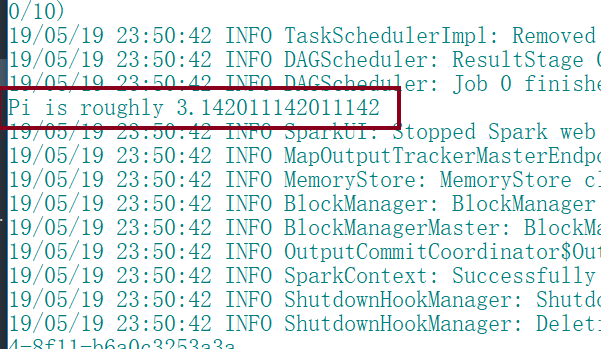
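The Spark UI is only served while a Spark application such as pyspark or spark-shell is running. If port 4040 is already taken, Spark moves on to 4041, 4042 and so on. The sketch below checks whether anything is listening on the UI port. It assumes Python 3, and is_port_open is a hypothetical helper. A successful TCP connection only shows that the port is open, not that the listener is the Spark UI.

```python
import socket


def is_port_open(host="localhost", port=4040, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError) if nothing listens
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Start pyspark or spark-shell in another window first
    for port in (4040, 4041):
        state = "open" if is_port_open(port=port) else "closed"
        print(f"http://localhost:{port} is {state}")
```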

Pi Estimator example

spark-submit --class org.apache.spark.examples.SparkPi --master local %SPARK_HOME%\examples\jars\spark-examples*.jar 10
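As far as I recall from the SparkPi source, the trailing 10 in the command is the number of slices (partitions). The example samples about 100000 random points per slice in the square from -1 to 1 and counts how many land inside the unit circle. The sketch below reproduces that Monte Carlo estimate serially in plain Python 3, for intuition only. It is not Spark code, and estimate_pi is a hypothetical name.

```python
import random


def estimate_pi(slices=2, seed=None):
    """Serial Monte Carlo estimate of pi, modelled on the SparkPi example.

    SparkPi spreads the samples over `slices` partitions and sums the
    per-partition counts; here a single loop does all the work.
    """
    rng = random.Random(seed)
    n = min(100_000 * slices, 2**31 - 1)  # sample budget grows with the slice count
    inside = 0
    for _ in range(1, n):  # n - 1 samples in total
        x = rng.random() * 2 - 1  # uniform point in the square [-1, 1) x [-1, 1)
        y = rng.random() * 2 - 1
        if x * x + y * y <= 1:
            inside += 1
    # (points inside the circle) / (all points) approximates pi / 4
    return 4.0 * inside / (n - 1)


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(10)}")
```

More slices mean more samples. In Spark they also mean more parallel tasks, which is why the argument controls both accuracy and parallelism.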

- Make a temporary directory, C:\tmp\hive, to enable the HiveContext in Spark, and set its permissions using the winutils.exe program included with the Hadoop common binaries:

mkdir C:\tmp\hive

%HADOOP_HOME%\bin\winutils.exe chmod 777 /tmp/hive
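You can script the two commands above if you set up more than one machine. This is a minimal sketch that assumes Python 3 on Windows with HADOOP_HOME already set. winutils_chmod_cmd and prepare_hive_scratch_dir are hypothetical helpers. They run the same winutils chmod 777 /tmp/hive call as the manual step and do nothing else.

```python
import ntpath
import os
import subprocess


def winutils_chmod_cmd(hadoop_home, target="/tmp/hive", mode="777"):
    """Build the winutils chmod command as an argument list (no shell needed)."""
    winutils = ntpath.join(hadoop_home, "bin", "winutils.exe")
    return [winutils, "chmod", mode, target]


def prepare_hive_scratch_dir(local_dir=r"C:\tmp\hive"):
    """Create the Hive scratch folder and open its permissions (Windows only)."""
    os.makedirs(local_dir, exist_ok=True)  # same as mkdir C:\tmp\hive, but idempotent
    cmd = winutils_chmod_cmd(os.environ["HADOOP_HOME"])
    # check=True raises CalledProcessError if winutils exits with an error code
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    prepare_hive_scratch_dir()
```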